For as long as Android has been around, the many manufacturers that adopted the platform have been trying to outdo each other, throwing everything they have at the problem in the hope of winning over new fans. To differentiate their products and avoid being ‘yet another Android maker’, those same companies have tweaked the look and function of the underlying software (sometimes heavily), all while consistently betting on the very best hardware available.

Looking back, it’s not hard to see where the “more is necessarily better” mentality came from. We’ve essentially been conditioned to think that way. So, for your average Joe, an octa-core processor is better than a quad-core one, 4GB of RAM is more desirable than 3GB, and a 20-megapixel camera beats a 13-megapixel one. Only, that’s not necessarily the case.

This is why Samsung’s move to a 12-megapixel camera with the Galaxy S7, down from the 16-megapixel unit in the S6, is defensible, both on paper and, from what we’ve seen so far, in practice. So far we’ve highlighted the other notable improvements the new camera brings to the table, such as a larger sensor, bigger pixels and a wider lens aperture, but we also wanted to address one concern some folks have: level of detail, or detail depth if you will.

In the next few paragraphs, we’ll try to explain why, despite the lower resolution, the images you get from the Galaxy S7 offer detail comparable to those from the Galaxy S6’s sensor, which is supposedly superior in this particular area.

Nobody likes disclaimers, but when we’re aiming for semi-scientific, concrete proof of our claims, it pays to lay down some ground rules. And in this case, the comparison is very easy to get wrong. But why?

Well, for starters, because we’re looking at two different sensors with different optics, each driven by at least marginally different camera software. This means that even if the depth of detail is essentially on par, things such as overzealous noise suppression can fool viewers into believing that one camera is superior to the other based on something like how crisp fine details appear.

By detail depth, we simply mean the answer to the following question: does the 16-megapixel sensor of the Galaxy S6 capture more visual information than the 12-megapixel snapper of the Galaxy S7?

In other words, if we zoom in to 500% on an image of a billboard that is 100 meters away, will we be able to read the fine print more easily? If we stray from this definition, we risk blaming resolving power for seemingly inferior performance whose real cause could be anything from weaker optics to human error to different software algorithms.

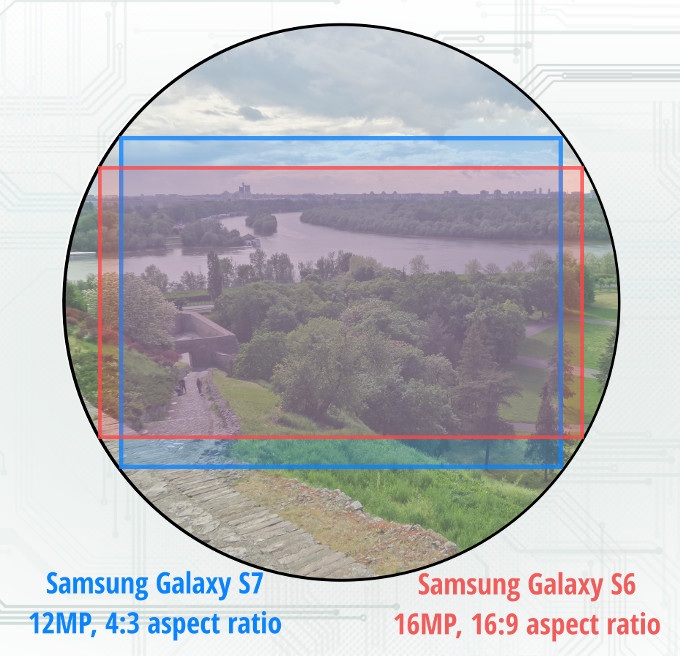

With this in mind, we’re ready to explain why, in reality, you can expect the same level of detail from both cameras. So what sorcery allows the 12-megapixel unit of the Galaxy S7 to match the 16-megapixel snapper of the Galaxy S6 in terms of detail depth? It’s actually exceedingly simple, though you might need a few seconds to wrap your head around it: the S7 has a 4:3 aspect ratio sensor, while the Galaxy S6’s is a 16:9 unit. To aid your understanding, here is a visual representation of what that means:

In this illustration, the outlined black circle is the camera lens that lets light in to illuminate the sensor, while the blue and red rectangles represent the physical shape of the two phones’ respective camera sensors. As you can see, the two overlap for the most part, except for two slices on the top/bottom and left/right sides.

And that’s exactly the point: the extra 4 megapixels you get with the Galaxy S6 are distributed on the left and right, or horizontally, giving you a wider view of the composition in front of you. They don’t mean that you’re getting better detail at any point within the overlapping area, since the number of pixels covering that area is essentially the same.
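To put rough numbers on this, here is a minimal Python sketch comparing the two pixel grids. The resolutions are an assumption on our part: they are the commonly reported full-resolution photo outputs of each phone (4032×3024 for the S7, 5312×2988 for the S6), not figures taken from our test shots.

```python
from fractions import Fraction

# Assumed full-resolution photo outputs (commonly reported specs, not measured here).
S7 = (4032, 3024)  # Galaxy S7: 12 MP, 4:3
S6 = (5312, 2988)  # Galaxy S6: 16 MP, 16:9


def megapixels(size: tuple[int, int]) -> float:
    """Total pixel count in millions."""
    width, height = size
    return width * height / 1_000_000


def aspect(size: tuple[int, int]) -> Fraction:
    """Exact width:height ratio, reduced to lowest terms."""
    width, height = size
    return Fraction(width, height)


def width_surplus(wide: tuple[int, int], narrow: tuple[int, int]) -> float:
    """Megapixels contributed by the wider grid's extra columns, at its own height."""
    extra_columns = wide[0] - narrow[0]
    return extra_columns * wide[1] / 1_000_000


if __name__ == "__main__":
    print(f"S7: {megapixels(S7):.1f} MP, aspect {aspect(S7)}")
    print(f"S6: {megapixels(S6):.1f} MP, aspect {aspect(S6)}")
    print(f"Rows (vertical pixels): S7 {S7[1]} vs S6 {S6[1]}")
    print(f"Extra MP from the S6's additional width: {width_surplus(S6, S7):.1f}")
```

Under these assumptions, the two grids have nearly the same number of rows (3,024 vs 2,988), and the S6’s surplus comes from roughly 1,280 extra columns, worth about 3.8 megapixels: the “extra 4 megapixels” sit at the sides of the frame.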

In a simpler world, if all else were constant (lens quality, software, focus, etc.), we’d have identical detail available at, say, the very center of the image. Since we’re dealing with two different devices with two very different sensors, we thought we’d go ahead and give you real-world examples of what we mean.

Jump into the gallery below, where we’ve cropped a number of stills taken with both the Galaxy S7 and S6 under identical conditions. As you’ll quickly find out, there’s no loss of detail despite the new flagship’s lower-resolution camera; the Galaxy S6 simply gives you about 4 megapixels’ worth of extra information in width.

Frank Tyler is the brilliant wordsmith behind the captivating world of Samsung's Android marvels. As the Editor in Chief at Samsung Fan Club, he wields his pen like a magic wand, weaving enchanting tales of innovation, cutting-edge technology, and the artistry that is Samsung's Android smartphones. With a deep passion for all things Samsung, Frank's prose dances with the rhythms of innovation, bringing the latest in Android excellence to life for tech enthusiasts worldwide. Join him on a journey through the digital cosmos as he unravels the mysteries and delights of Samsung's ever-evolving Android universe.
